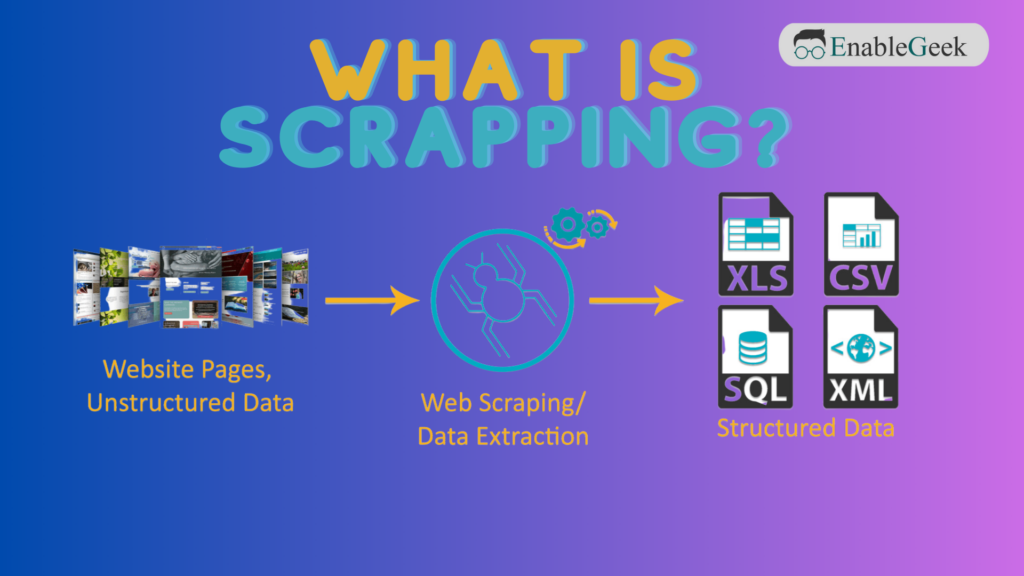

The Basics of Data Scraping

Data scraping, also known as web scraping or data harvesting, is the process of extracting data from websites or other online sources. It involves automating the retrieval of information and transforming it into a structured format for further analysis or use. Data scraping has become an essential technique for various purposes, including market research, competitive analysis, data aggregation, and more.

Here are some key points to understand about the basics of data scraping:

- Purpose: Data scraping allows you to gather large amounts of data from different sources efficiently. It helps in automating the collection of information that would otherwise be time-consuming and labor-intensive to obtain manually.

- Techniques: There are multiple techniques for data scraping. The most common approach is to download a website’s HTML and extract the relevant data with a parser. This involves navigating the HTML tags, identifying the elements that hold the data, and extracting their contents, usually with an HTML parsing library and CSS or XPath selectors. Regular expressions can handle simple, predictable patterns, but they tend to break on real-world HTML.

- Legal and Ethical Considerations: While data scraping offers valuable insights, it’s crucial to respect legal and ethical boundaries. Websites may have terms of service or usage policies that prohibit scraping their content. It’s important to review the website’s policies and consider obtaining permission or using public APIs if available. Additionally, being mindful of data privacy and protecting personal information is essential.

- Tools and Libraries: Various programming languages offer libraries and frameworks designed for data scraping. For example, Python has Beautiful Soup for parsing HTML, Scrapy for building crawlers that fetch and process pages at scale, and Selenium for automating a real browser. Together they cover fetching web pages, extracting data, and navigating website structures.

- Handling Dynamic Content: Many websites load content with JavaScript, often through AJAX requests made after the initial page load, so a plain HTTP request returns HTML that lacks the data you see in the browser. Scraping these sites requires additional techniques: browser automation tools like Selenium can render pages and interact with them, allowing you to scrape data from dynamic elements.

- Data Cleaning and Structuring: Extracted data may require cleaning and structuring to make it usable. This involves removing irrelevant or redundant information, handling missing values, standardizing formats, and organizing the data into a structured format like CSV, JSON, or a database.

- Scalability and Performance: When scraping large amounts of data or many websites, plan for scalability and performance from the start. Techniques like asynchronous scraping, distributed systems, and efficient data storage can help manage and process large-scale scraping tasks.
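To make the parsing and cleaning steps concrete, here is a minimal sketch that uses only Python’s standard library (`html.parser`, `csv`, `json`). It assumes a hypothetical page where each product name sits in a `<span class="name">` element and each price in a `<span class="price">` element. A real site needs rules matched to its own markup, and a library such as Beautiful Soup would make the navigation step shorter.

```python
import csv
import io
import json
from html.parser import HTMLParser
from typing import Dict, List, Optional

# Hypothetical page layout: one <span class="name"> and one <span class="price"> per product.
SAMPLE_HTML = """
<ul>
  <li><span class="name"> Desk Lamp </span><span class="price">$24.99</span></li>
  <li><span class="name">Office Chair</span><span class="price"> $1,299.00 </span></li>
  <li><span class="name">Bookshelf</span><span class="price">Call for price</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the raw text inside name and price <span> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: List[Dict[str, str]] = []
        self._field: Optional[str] = None  # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        if tag != "span":
            return
        classes = (dict(attrs).get("class") or "").split()
        if "name" in classes:
            # A name span starts a new product record.
            self.rows.append({"name": "", "price": ""})
            self._field = "name"
        elif "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] += data


def clean_price(raw: str) -> Optional[float]:
    """Turn text like ' $1,299.00 ' into 1299.0; return None when there is no number."""
    text = raw.strip().replace("$", "").replace(",", "")
    try:
        return float(text)
    except ValueError:
        return None


def scrape_products(html: str) -> List[Dict[str, object]]:
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    # Cleaning step: trim whitespace and standardize prices as numbers.
    return [
        {"name": row["name"].strip(), "price": clean_price(row["price"])}
        for row in parser.rows
    ]


def to_csv(products: List[Dict[str, object]]) -> str:
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(products)  # None is written as an empty field
    return buffer.getvalue()


if __name__ == "__main__":
    products = scrape_products(SAMPLE_HTML)
    print(json.dumps(products, indent=2))
    print(to_csv(products))
```

Returning `None` for an unparseable price, rather than guessing a value, keeps missing data visible in the CSV so it can be handled explicitly in later cleaning.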

Data scraping is a powerful technique for extracting valuable insights and automating data collection from online sources. By understanding the basics and following legal and ethical guidelines, you can leverage data scraping effectively for various applications in business, research, and analysis.

Understanding the Legality and Ethics of Data Scraping

In today’s digital age, data has become a valuable resource for businesses, researchers, and individuals alike. As a result, the practice of data scraping, or web scraping, has gained prominence. Data scraping refers to the automated process of extracting information from websites, often for purposes such as market research, data analysis, or competitive intelligence. However, the legality and ethics of data scraping are subjects of ongoing debate. This article aims to provide insights into the legal and ethical considerations surrounding data scraping, accompanied by examples and resources for further exploration.

Legal Considerations: The legality of data scraping varies across jurisdictions and depends on factors such as the nature of the scraped data, the methods used, and the website’s terms of service. Some countries have laws that address scraping specifically, while others rely on existing intellectual property, contract, or computer misuse laws. In the United States, for instance, the Computer Fraud and Abuse Act (CFAA) has been invoked in lawsuits against scrapers on the theory that scraping amounted to unauthorized access to a website. In the European Union, including Germany, the General Data Protection Regulation (GDPR) strictly regulates the processing of personal data, which makes scraping personal information considerably more challenging.

Ethical Considerations: While something may be legal, it doesn’t necessarily mean it is ethically justified. Ethical concerns arise in data scraping due to issues such as privacy invasion, intellectual property infringement, and disruption of website operations. For instance, scraping personal information without consent or scraping copyrighted content may raise ethical red flags. Moreover, aggressive scraping practices that overwhelm servers and impact website performance can be deemed unethical.

Examples of Data Scraping Cases:

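- hiQ Labs v. LinkedIn: In 2017, LinkedIn sent a cease-and-desist letter to hiQ Labs, a data analytics company, to stop it from scraping public LinkedIn profiles. hiQ sued, and in 2019 the U.S. Court of Appeals for the Ninth Circuit upheld a preliminary injunction in hiQ’s favor, reasoning that scraping publicly accessible data likely does not amount to access “without authorization” under the CFAA and warning that letting companies control who may collect public data risks creating information monopolies. The dispute continued, however, and in 2022 a district court found that hiQ had breached LinkedIn’s User Agreement, so the case does not establish that scraping public data is always lawful.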

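- Facebook and Cambridge Analytica: The Cambridge Analytica scandal revealed how large-scale data harvesting, combined with unauthorized data sharing, can have significant privacy implications. The data was collected through a third-party Facebook app that gathered information from quiz takers and their friends via Facebook’s platform rather than by scraping web pages, but the incident highlighted the same ethical concerns about collecting and using personal data without meaningful consent.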

Resources for Further Exploration:

- “Web Scraping and Data Protection Laws” – Article by European Data Protection Board: Link: https://edpb.europa.eu/sites/edpb/files/files/file1/edpb_guidelines_202007_web_scraping_en.pdf

- “Web Scraping: Legal or Not?” – Article by Brian Powers, Attorney at Law: Link: https://www.brianpowerslaw.com/web-scraping-legal-or-not/

- “The Ethics of Web Scraping” – Blog post by Datahut: Link: https://www.datahut.co/blog/the-ethics-of-web-scraping/

Conclusion: Data scraping is a powerful tool for extracting valuable insights and driving innovation. However, it is essential to navigate the legal and ethical landscape surrounding this practice. Understanding the jurisdiction-specific laws, respecting website terms of service, obtaining proper consent, and ensuring responsible and ethical scraping practices are crucial steps for businesses and individuals engaged in data scraping. By being aware of the potential legal implications and ethical considerations, stakeholders can harness the benefits of data scraping while upholding the rights and privacy of individuals and organizations.

Best Practices for Web Scraping

Web scraping has emerged as a vital technique for extracting valuable data from websites, enabling businesses and researchers to gain actionable insights. However, in an era where data privacy and ethical considerations are paramount, it is crucial to approach web scraping responsibly. This article will explore the best practices for web scraping, empowering practitioners to leverage this powerful tool while respecting legal and ethical boundaries.

- Obtain Legal Permission: Before engaging in web scraping, it is essential to ensure that you have legal permission to access and extract data from the target website. Review the website’s terms of service or consult legal experts to understand any restrictions or requirements. Respect copyright laws, intellectual property rights, and applicable data protection regulations specific to your jurisdiction.

- Identify Target Websites Ethically: Selecting the right websites for scraping is crucial. Target public websites or those that explicitly allow data scraping through their terms of service or APIs. Avoid scraping private or password-protected websites, as doing so may violate legal and ethical boundaries. Additionally, respect website administrators’ requests to refrain from scraping their content.

- Use Respectful Scraping Techniques: Employ scraping techniques that respect the target website’s resources and operations. Avoid overwhelming servers with excessive requests or aggressive scraping practices. Implement measures such as adding time delays between requests, randomizing scraping patterns, and using efficient scraping libraries or frameworks to minimize server load. Responsible scraping ensures that the website remains functional for its intended users.

- Respect Robots.txt and Crawl-Delay: Most websites publish a robots.txt file at the root of their domain as a guide for web crawlers. Pay attention to its directives to determine which sections of the website are open to crawling. Adhere to any Crawl-delay directive, which specifies how many seconds to wait between requests; it is a nonstandard extension, so many sites omit it and some crawlers ignore it. Following robots.txt guidelines demonstrates good scraping etiquette.

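- Anonymize Requests and User Agents: To maintain anonymity and reduce the risk of being blocked or detected, consider rotating IP addresses or using proxy servers. This practice prevents websites from linking scraping activities to a single source. Additionally, customize your user agent strings to mimic popular web browsers, as some websites may block requests from unfamiliar user agents. Be aware, however, that using these techniques to get around a site’s blocks can violate its terms of service and conflicts with respecting administrators’ requests not to be scraped; a transparent alternative is a user agent that names your bot and includes contact information.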

- Respect Privacy and Personal Data: Ensure that your scraping activities prioritize user privacy and adhere to data protection regulations. Avoid scraping personally identifiable information (PII) without explicit user consent. Be cautious when handling sensitive data and employ appropriate security measures to protect the data you collect.

- Monitor and Adapt: Regularly monitor the websites you scrape to identify any changes in their structure or terms of service. Websites may update their policies, restrict access, or introduce anti-scraping measures. Stay informed and adapt your scraping techniques accordingly to maintain compliance and avoid potential legal issues.
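Several of these practices can be combined in a small “polite” fetcher. This sketch uses Python’s standard `urllib.robotparser` to check robots.txt rules and read any Crawl-delay, sends a User-Agent header that identifies the bot, and sleeps between consecutive requests. The robots.txt content, the `USER_AGENT` string, and the 1-second fallback delay are illustrative assumptions; against a live site you would load the real file with `set_url()` and `read()`. Rather than mimicking a browser, the sketch takes the transparent route of naming the bot and giving a contact address.

```python
import time
import urllib.request
from urllib.robotparser import RobotFileParser

# Hypothetical bot identity; a contact address lets site owners reach you.
USER_AGENT = "ExampleResearchBot/1.0 (+mailto:scraper-admin@example.com)"

# Illustrative robots.txt content; a live crawler would download the real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def load_rules(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text. Against a live site, use set_url() and read() instead."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


def polite_plan(urls, rules: RobotFileParser, default_delay: float = 1.0):
    """Keep only URLs robots.txt allows, and pick the delay between requests."""
    allowed = [url for url in urls if rules.can_fetch(USER_AGENT, url)]
    # crawl_delay() returns None when robots.txt sets no Crawl-delay for us.
    delay = rules.crawl_delay(USER_AGENT) or default_delay
    return allowed, float(delay)


def fetch(url: str) -> bytes:
    """Request a page while identifying the bot through the User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read()


def polite_crawl(urls, rules: RobotFileParser):
    allowed, delay = polite_plan(urls, rules)
    pages = {}
    for i, url in enumerate(allowed):
        if i:
            time.sleep(delay)  # space out consecutive requests to limit server load
        pages[url] = fetch(url)
    return pages


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    allowed, delay = polite_plan(
        ["https://example.com/products/1", "https://example.com/private/admin"], rules
    )
    print(allowed, delay)  # ['https://example.com/products/1'] 2.0
```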

Web scraping offers immense potential for data-driven decision-making, market research, and innovation. However, it is essential to approach scraping responsibly, keeping legal and ethical considerations at the forefront. By obtaining legal permission, respecting website terms of service, employing responsible scraping techniques, and prioritizing user privacy, practitioners can harness the power of web scraping while maintaining trust and integrity in the digital ecosystem. Embracing these best practices will enable a harmonious balance between data extraction and ethical data usage, ensuring a sustainable and responsible approach to web scraping.

Introduction to Web Scraping Libraries and Frameworks

Web scraping is a powerful technique for extracting data from websites, and there are several libraries and frameworks available that make the process more efficient and straightforward. These tools provide functionalities to fetch web pages, parse HTML or XML content, and extract data from structured or unstructured sources. Here are some commonly used web scraping libraries and frameworks:

- Beautiful Soup: Beautiful Soup is a popular Python library for web scraping. It simplifies parsing HTML and XML documents, allowing you to extract data by navigating through the document’s structure. Beautiful Soup provides a convenient API for searching and manipulating parsed data, making it a widely used choice for beginners and experienced scrapers alike.

- Scrapy: Scrapy is a powerful and extensible web scraping framework written in Python. It provides a full-featured ecosystem for building scalable and efficient web scraping applications. Scrapy handles various aspects of the scraping process, such as making requests, handling cookies and sessions, and parsing responses. It also supports asynchronous scraping and allows you to define flexible crawling rules.

- Selenium: Selenium is a web automation framework that is often used for scraping websites with dynamic content. Selenium allows you to automate interactions with web pages, including clicking buttons, filling forms, and waiting for page updates. It is particularly useful for scraping JavaScript-driven websites or those that rely heavily on user interactions.

- Puppeteer: Puppeteer is a Node.js library that provides a high-level API for controlling headless Chrome or Chromium browsers. It allows you to automate web browsing and scraping tasks by simulating user interactions. Puppeteer is well-suited for scraping websites that heavily rely on JavaScript and require rendering of dynamic content.

- Requests-HTML: Requests-HTML is a Python library that combines the simplicity of Requests with the power of HTML parsing. It provides an intuitive interface for making HTTP requests and parsing HTML content. Requests-HTML supports various parsing techniques, including searching for elements using CSS selectors and handling JavaScript-rendered pages.

- Apify: Apify is a web scraping and automation platform that offers a range of features and tools. It provides a user-friendly interface for building and managing scraping tasks, handling proxies and CAPTCHAs, and storing the scraped data. Apify supports both headless browser scraping and simple HTTP request-based scraping.

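- Goutte: Goutte is a PHP web scraping library that simplifies making HTTP requests, parsing HTML content, and extracting data from websites, with an expressive API for navigating and querying HTML elements. Early versions were built on the Guzzle HTTP client; since version 4 it has been a thin wrapper around the HttpBrowser class from Symfony’s BrowserKit component, and the project is now deprecated in favor of using that class directly.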

These are just a few examples of the many web scraping libraries and frameworks available. When choosing a library or framework, consider factors such as the programming language you are comfortable with, the complexity of the scraping task, the website’s structure and dynamics, and the specific features and functionalities you require.

Using these libraries and frameworks can significantly streamline your web scraping process, handle common scraping challenges, and provide robust solutions for extracting data from websites efficiently.

Data Scraping vs. APIs: Pros and Cons

Data acquisition is a fundamental aspect of many applications and projects, and two common methods for accessing data are data scraping and APIs (Application Programming Interfaces). Both approaches offer distinct advantages and limitations, and understanding their pros and cons is essential when choosing the appropriate method for your specific use case. Here is an overview of the pros and cons of data scraping and APIs:

Data Scraping: Pros:

- Data from Any Source: Data scraping allows you to extract data from virtually any publicly accessible website or online source, regardless of whether an API is available.

- Flexibility and Customization: With data scraping, you have more control over the data extraction process. You can tailor the scraping logic to extract specific data points or traverse complex website structures.

- Real-time Data: Data scraping enables you to access the most up-to-date information available on a website, which can be crucial for applications that require real-time or near-real-time data.

Cons:

- Legality and Ethical Considerations: Data scraping can raise legal and ethical concerns, as websites may have terms of service that prohibit scraping or require explicit permission.

- Lack of Structure: Scraped data is often unstructured and may require additional processing and cleaning before it can be used effectively.

- Fragile Scraping Logic: Websites frequently update their structure or layout, which can break scraping scripts. Regular maintenance is required to keep the scraping logic up to date.

APIs (Application Programming Interfaces): Pros:

- Structured Data: APIs provide structured data in a consistent format, making it easier to work with and integrate into applications.

- Officially Supported: APIs are typically maintained by the data source itself, ensuring reliability and ongoing support.

- Security and Authentication: APIs often require authentication, providing a secure and controlled method of data access.

Cons:

- Limited Data Availability: Not all data sources offer APIs, limiting the range of accessible data.

- Potential Cost: Some APIs may have usage limits or require paid subscriptions, adding to the cost of accessing data.

- Data Quality and Timeliness: APIs may not provide real-time data, and the quality or depth of data can vary between different providers.
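The contrast between structured API data and fragile scraping logic shows up even in a tiny example. In this sketch, the JSON response and the HTML snippet are hypothetical representations of the same product. The API version simply reads named fields, while the scraping version depends on the exact markup (the regular expression is deliberately simple to make that dependence visible) and stops matching as soon as a class name changes.

```python
import json
import re
from typing import Dict, List

# Hypothetical API response: fields are named and typed by the provider.
API_RESPONSE = '{"products": [{"name": "Desk Lamp", "price": 24.99}]}'

# Hypothetical HTML showing the same product on a web page.
PAGE_HTML = '<li><span class="name">Desk Lamp</span><span class="price">$24.99</span></li>'

# Deliberately tied to the current markup, to show how scraping logic depends on it.
PRODUCT_PATTERN = re.compile(
    r'<span class="name">(.*?)</span><span class="price">\$([\d.,]+)</span>'
)


def from_api(body: str) -> List[Dict[str, object]]:
    """Structured data: decode the JSON and read the documented fields."""
    return json.loads(body)["products"]


def from_html(html: str) -> List[Dict[str, object]]:
    """Scraped data: recover fields from markup, then convert the price text."""
    return [
        {"name": name, "price": float(price.replace(",", ""))}
        for name, price in PRODUCT_PATTERN.findall(html)
    ]


if __name__ == "__main__":
    print(from_api(API_RESPONSE))  # [{'name': 'Desk Lamp', 'price': 24.99}]
    print(from_html(PAGE_HTML))  # [{'name': 'Desk Lamp', 'price': 24.99}]
    # A site redesign that renames one class silently yields no data.
    print(from_html(PAGE_HTML.replace('class="name"', 'class="title"')))  # []
```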

In summary, data scraping offers greater flexibility and the ability to extract data from any source but comes with legal and ethical considerations. APIs provide structured data, official support, and enhanced security but may have limited availability and potential cost implications. The choice between data scraping and APIs depends on factors such as the specific data source, data requirements, legal and ethical considerations, and the level of control and customization needed for the project.