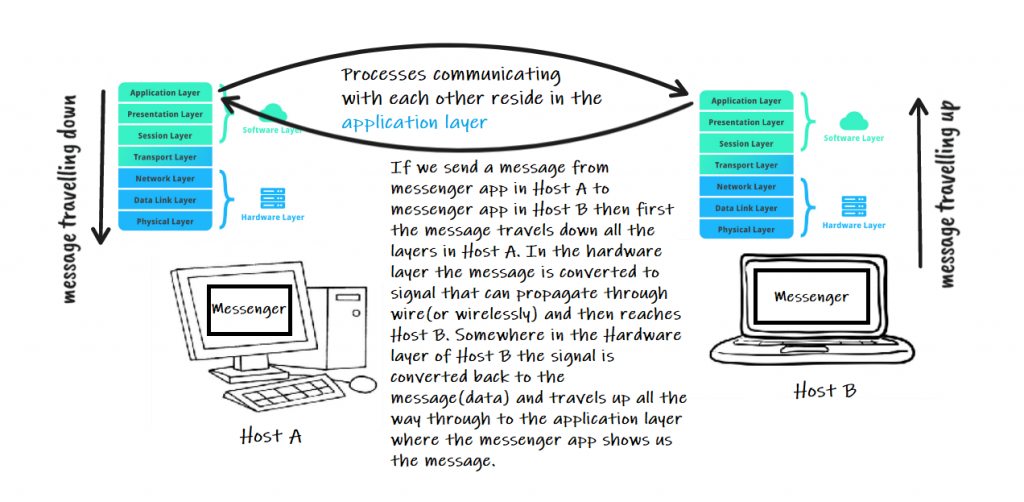

The whole purpose of network programming is to let your program talk to a program on another machine. It does not matter whether you have implemented the Client-Server Model or the Peer-to-Peer Model: the end goal is that your program can request something from a program running on another machine. For example, in a Client-Server architecture, part of your app must run on a server, while another part (usually a client) must run on a different machine.

We previously stated that communication for a network application occurs between end systems at the Application Layer. Before you can build your network application, you must first understand how programs running on different end systems communicate with one another. After all, if parts of our network application cannot communicate with other parts, it is not really a network application.

In this tutorial, let us look at how programs on different hosts communicate with each other. As a Network Application Developer, you do not have to worry about all the low-level networking details. We will only cover the concepts that you need in order to create a Network Application in general.

What Is A Process?

When we say programs in the context of Network Programming, we really mean processes. In operating systems, a process is a program that has been loaded into memory and is currently being executed by the central processing unit (CPU). It has its own memory space and system resources. A process is regarded as the unit of execution in the operating system context: it is the basic entity to which the OS allocates resources (such as memory and open files). A process can read and write to these resources as needed, but it is not allowed to access or modify resources that are not allocated to it. When a process terminates, all the resources allocated to it are freed and can be used by other processes.
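To make this concrete, here is a minimal Python sketch in which one process starts another. It assumes a Python 3.7+ interpreter is available; the names `CHILD_CODE`, `run_child`, and `counter` are ours, chosen only for illustration. The child is a second interpreter with its own process ID and its own memory, so the `counter` it sets does not touch the `counter` in the parent.

```python
import os
import subprocess
import sys

# Code the child process runs: it reports its own process ID and the value
# of its own variable called `counter`.
CHILD_CODE = "import os; counter = 100; print(os.getpid(), counter)"


def run_child():
    """Start a second Python interpreter as a separate process."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        capture_output=True,
        text=True,
        check=True,
    )
    child_pid, child_counter = result.stdout.split()
    return int(child_pid), int(child_counter)


counter = 1  # lives in the parent's memory only
child_pid, child_counter = run_child()

print("parent pid:", os.getpid(), "counter:", counter)
print("child pid:", child_pid, "counter:", child_counter)
```

The two process IDs differ, and the parent's `counter` is still 1 after the child has finished, even though the child used the same variable name.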

In the jargon of operating systems, it is processes that communicate, not programs. Before telling you how processes communicate, let us tell you a little more about processes themselves. You do not strictly need this background when you have just started writing network applications, but it will help you in the long run.

A Process Example

Let us give you an example of how an operating system allocates resources to a process. Suppose you have a PC with 4GB of RAM installed. When someone says their PC has 4GB of RAM, they are referring to the capacity of the memory modules, not to storage space. This memory temporarily holds data and program code so that the CPU can process them.

RAM is volatile memory, meaning the data stored in it is lost if the computer is turned off or loses power. To store data permanently, computers also have non-volatile storage such as a hard drive or a solid state drive, commonly known as a storage drive.

The CPU can only read and write to its registers and main memory. It never has direct access to the disk, so any data stored there must first be transferred into main memory before the CPU can work with it.

We’re sure you already know this stuff. We mention it only to make sure we are all on the same page.

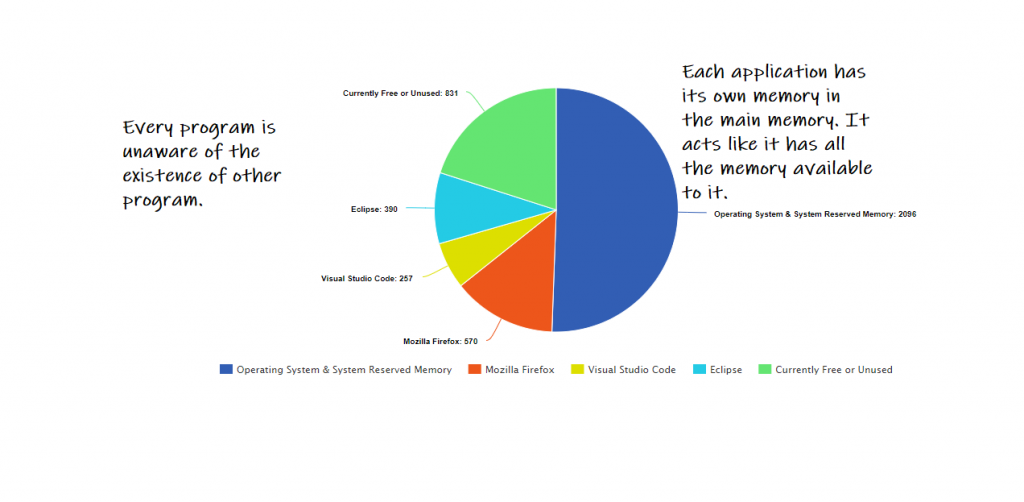

Let us return to our 4GB of RAM. Assume 2GB of RAM is being used by the operating system and other system-reserved memory. That leaves 2GB (2048MB) of memory available to run applications such as browsers, text editors, IDEs, and so on. A real-world scenario is not this simple: various other factors come into the picture, and you will have less than 2048MB of memory at your disposal. That is beyond the scope of this tutorial, and even of this course, so let us not complicate things unnecessarily. We will assume that we have 2GB of RAM available to us.

Each process must be restricted to only access memory locations that “belong” to that process. We will explain in a minute why this restriction is necessary.

In computer architecture, for each process, a base register and a limit register are typically used to define a memory segment. A memory segment is a portion of memory that is set aside for a specific purpose, such as holding program code or data.

A base register contains the starting memory address of a memory segment. This address is also known as the “base” of the segment.

A limit register contains the size of the memory segment. This value is also known as the “limit” of the segment. It is used by the computer’s memory management system to ensure that a process cannot access memory outside of its assigned segment.

Together, the base register and the limit register define the range of memory addresses that a process is allowed to access. Any attempt to access memory outside of this range will result in a memory protection fault, which is a type of error that occurs when a process tries to access memory that it is not authorized to use.
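The check described above can be sketched in a few lines of Python. This is only a simulation for illustration: real hardware performs the comparison on every memory access, and the names `check_access` and `MemoryProtectionFault`, as well as the base and limit values, are hypothetical.

```python
class MemoryProtectionFault(Exception):
    """Raised when a simulated process touches memory outside its segment."""


def check_access(address, base, limit):
    """Return the address if it lies inside [base, base + limit), else fault."""
    if base <= address < base + limit:
        return address
    raise MemoryProtectionFault(
        f"address {address} is outside {base}..{base + limit - 1}"
    )


# Hypothetical register values for one process.
BASE = 300040    # starting address of the segment
LIMIT = 120900   # size of the segment

check_access(300040, BASE, LIMIT)  # first legal address
check_access(420939, BASE, LIMIT)  # last legal address (base + limit - 1)

try:
    check_access(420940, BASE, LIMIT)  # one past the end of the segment
except MemoryProtectionFault as fault:
    print("fault:", fault)
```

With these values, the process may use addresses 300040 through 420939 inclusive, and anything below or above that range is rejected.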

What happens if user processes are not restricted to the memory locations that “belong” to them? In other words, what happens if one process can access the memory of another?

If one process has access to another’s memory, it can potentially cause a number of problems, including:

Security issues: If a malicious process is able to access another process’s memory, it could potentially steal sensitive data, such as login credentials or personal information. It could also inject malware or other malicious code into the other process, which could then propagate and cause further damage.

Data corruption: If one process modifies the memory of another process without proper synchronization, it can cause data corruption and lead to unexpected behavior or crashes.

Interference: If one process is able to access another process’s memory, it could interfere with the normal operation of that process, causing it to behave in unexpected ways or to crash.

Resource depletion: If one process has access to another process’s memory, it could consume resources that the other process needs, such as memory or CPU time, which can cause the other process to slow down or become unresponsive.

To prevent these issues, operating systems use memory protection mechanisms, such as segmentation and paging, to ensure that each process only has access to its own memory. Memory protection is also enforced in hardware: the processor has a memory management unit (MMU) that checks each memory access and ensures that it is valid.

The OS itself has access to all memory locations, because it must be able to swap users’ code and data in and out of memory. It follows that changing the contents of the base and limit registers is a privileged operation that only the OS kernel is permitted to perform.

Sandboxing

Operating systems employ a security technique called sandboxing, which isolates a program or process from the rest of the system. It creates a “sandbox”, a protected environment in which the program can execute without having access to sensitive system resources or data.

The idea behind sandboxing is to limit the potential damage that a malicious or malfunctioning program can cause. By isolating the program from the rest of the system, the sandbox prevents it from modifying or accessing sensitive data, opening network connections, or executing harmful actions. Sandboxing also allows the operating system to monitor and control the program’s behavior, enabling it to detect and stop any malicious activity.

The next big question is – if all processes are “protected,” how will they communicate when they truly need to?

Processes sometimes need to communicate with each other and share resources, such as data or files, and they can; that is the subject of this tutorial. The operating system provides mechanisms for inter-process communication, such as pipes and shared memory, that allow processes to work together. This course does not cover the full mechanism of how processes communicate with one another; a detailed description can be found in any operating systems reference book. We will only talk about it briefly in the next tutorial.
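As a small taste of inter-process communication, here is a hedged Python sketch that uses pipes. When `subprocess.run` is given `input=` and `capture_output=True`, it connects the child's standard input and standard output to pipes. The names `CHILD_CODE` and `ask_child` are ours, and the "protocol" (the child simply upper-cases whatever it receives) is invented for the example.

```python
import subprocess
import sys

# Code the child process runs: read everything from its standard input
# and write it back in upper case.
CHILD_CODE = "import sys; print(sys.stdin.read().upper(), end='')"


def ask_child(message):
    """Send a message to a child process through a pipe and read its reply."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        input=message,        # written to the child's stdin pipe
        capture_output=True,  # stdout and stderr are read back through pipes
        text=True,
        check=True,
    )
    return result.stdout


reply = ask_child("hello from the parent")
print(reply)
```

The two processes never touch each other's memory. The data travels through pipes that the operating system manages on their behalf.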

The operating system also uses a process scheduler to determine which process should be executed next. This is based on factors such as the process’s priority, how much CPU time it has already used, and how long it has been waiting.
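To illustrate the idea, here is a toy Python sketch that picks the next process from a ready queue using the three factors mentioned above. It is not how any real scheduler works: the `ProcessInfo` class, the `score` formula, and its weights are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class ProcessInfo:
    pid: int
    priority: int         # assumption: a higher number means more important
    cpu_time_used: float  # seconds of CPU time already consumed
    waiting_time: float   # seconds spent waiting in the ready queue


def score(process):
    """Toy formula: favour high priority and long waits, penalise CPU hogs."""
    return process.priority * 10 + process.waiting_time - process.cpu_time_used


def pick_next(ready_queue):
    """Return the process with the highest score."""
    return max(ready_queue, key=score)


ready_queue = [
    ProcessInfo(pid=101, priority=1, cpu_time_used=5.0, waiting_time=2.0),
    ProcessInfo(pid=102, priority=1, cpu_time_used=0.5, waiting_time=8.0),
    ProcessInfo(pid=103, priority=2, cpu_time_used=9.0, waiting_time=1.0),
]

print("next process:", pick_next(ready_queue).pid)
```

With these made-up numbers the scores are 7, 17.5, and 12, so process 102 runs next: it has waited the longest and used the least CPU time, which outweighs the higher priority of process 103.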

In summary, a process is a program that is being executed and managed by the operating system. It has a unique ID and its own resources, it can communicate with other processes, and the operating system schedules its execution.